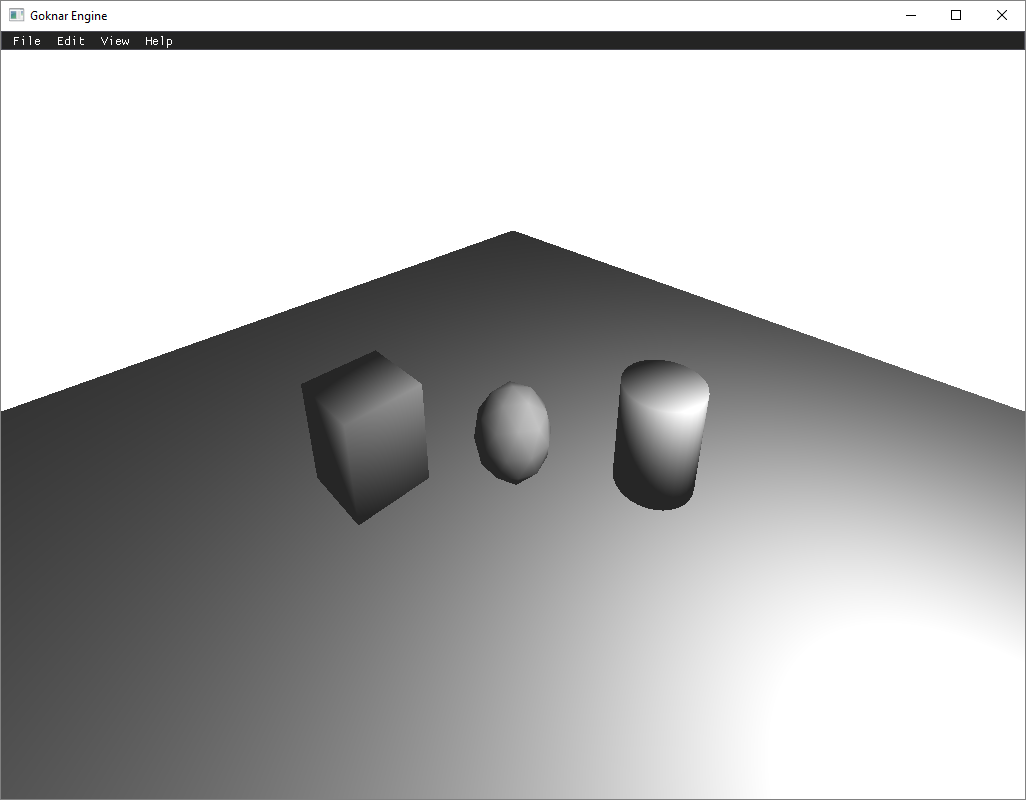

In this part of the series I explain how I implemented the Camera, Renderer, Mesh, and Shader classes, along with the corresponding transformation matrices and functions.

Camera

The camera implementation requires a few new functions in the Math and Vector3 classes.

I added a LookAt function to the Math class instead of using the GLU library, because I don’t want to depend too heavily on other libraries and I already have vector and matrix classes implemented, so there is no need to pull in another library for a handful of its functions. LookAt calculates the view matrix of the camera, which is explained in the following section. I also added transformation operations (translation, rotation, and scaling) to the Vector3 class; these are useful beyond the camera implementation.

The Camera class holds every necessary variable and function, such as the position, the forward, up, and right vectors, and the near and far distances. It also has three rotation functions (yaw, pitch, and roll). Perhaps the most important concepts in a camera implementation are the view and projection matrices, so they get a section of their own.
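Yaw, pitch, and roll each rotate one of the camera's basis vectors around another. As a minimal sketch of that idea, here is a rotation around an arbitrary unit axis using Rodrigues' formula. This is not the engine's `Vector3::Rotate`, which applies per-axis rotations; `Vec3`, `rotateAroundAxis`, and the choice of +x as forward and +z as up are assumptions made only for this illustration.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-in for the engine's Vector3; only what the sketch needs.
struct Vec3 { float x, y, z; };

Vec3 operator+(const Vec3& a, const Vec3& b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator*(const Vec3& v, float s) { return {v.x * s, v.y * s, v.z * s}; }
float dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(const Vec3& a, const Vec3& b)
{
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// Rodrigues' formula: rotate v by `angle` radians around the unit vector `axis`.
Vec3 rotateAroundAxis(const Vec3& v, const Vec3& axis, float angle)
{
    const float c = std::cos(angle);
    const float s = std::sin(angle);
    return v * c + cross(axis, v) * s + axis * (dot(axis, v) * (1.f - c));
}

bool nearlyEqual(float a, float b) { return std::fabs(a - b) < 1e-5f; }

// Yaw example: turning the forward vector a quarter turn around the up vector.
void yawExample()
{
    const Vec3 forward{1.f, 0.f, 0.f}; // assumed forward axis
    const Vec3 up{0.f, 0.f, 1.f};      // assumed up axis
    const Vec3 rotated = rotateAroundAxis(forward, up, 3.14159265f / 2.f);
    // A counter-clockwise quarter turn around +z maps +x onto +y.
    assert(nearlyEqual(rotated.x, 0.f));
    assert(nearlyEqual(rotated.y, 1.f));
    assert(nearlyEqual(rotated.z, 0.f));
}
```

Calling `yawExample()` from any test or `main` function exercises the sketch. After a yaw like this, the right vector would be recomputed from the new forward and the unchanged up vector.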

View and Projection Matrices

The view matrix of a camera brings every vertex from world space to view space, i.e. every vertex is transformed by the view matrix. You may think that moving or rotating the camera has nothing to do with the objects, because it is the camera that is transformed and not the objects, but this is not the case in computer graphics. In CG there is no such thing as rotating or moving the camera; only vertices can be moved and rotated. The object we call “camera” is only an object that holds a view and a projection matrix, i.e. when we transform our view, every object in the scene is transformed by the inverse of that motion while the camera itself stays in place.
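To make the "only the vertices move" idea concrete, the following sketch transforms a point into view space using the same basis construction as a look-at function, then checks that moving the camera right gives the same view-space position as moving the vertex left. `Vec3` and `worldToView` are hypothetical names for this example, not engine classes.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical minimal vector type for this sketch (not the engine's Vector3).
struct Vec3 { float x, y, z; };

Vec3 operator-(const Vec3& a, const Vec3& b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
float dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(const Vec3& a, const Vec3& b)
{
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 normalize(const Vec3& v)
{
    const float length = std::sqrt(dot(v, v));
    return {v.x / length, v.y / length, v.z / length};
}

// Transforms a world-space point into the view space of a camera at `eye` looking at `target`.
Vec3 worldToView(const Vec3& point, const Vec3& eye, const Vec3& target, const Vec3& worldUp)
{
    const Vec3 backward = normalize(eye - target);           // the camera looks down its own -z axis
    const Vec3 right = normalize(cross(worldUp, backward));
    const Vec3 up = cross(backward, right);
    const Vec3 relative = point - eye;                        // translate so the camera sits at the origin
    return {dot(right, relative), dot(up, relative), dot(backward, relative)};
}

bool nearlyEqual(const Vec3& a, const Vec3& b)
{
    return std::fabs(a.x - b.x) < 1e-5f && std::fabs(a.y - b.y) < 1e-5f && std::fabs(a.z - b.z) < 1e-5f;
}

void cameraVersusWorldExample()
{
    const Vec3 up{0.f, 1.f, 0.f};
    // Camera moved 2 units to the right, vertex untouched.
    const Vec3 movedCamera = worldToView({1.f, 0.f, 0.f}, {2.f, 0.f, 5.f}, {2.f, 0.f, 0.f}, up);
    // Camera untouched, vertex moved 2 units to the left.
    const Vec3 movedVertex = worldToView({-1.f, 0.f, 0.f}, {0.f, 0.f, 5.f}, {0.f, 0.f, 0.f}, up);
    assert(nearlyEqual(movedCamera, movedVertex));
}
```

Both calls produce the same view-space point, which is why the renderer only ever needs to transform vertices.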

As we all know, our screens are 2D but our scenes are 3D, so we need to calculate where on the screen each fragment (pixel-to-be) lands. This is the job of the projection matrix. There are two projection types in computer graphics: perspective and orthographic. In orthographic projection, objects keep the same size regardless of their distance, because depth does not affect scale. A box placed 1 meter in front of the camera appears the same size as the same box placed 1 kilometer away. Orthographic projection is generally used in 2D and isometric games and in professional fields such as architecture. Perspective projection, on the other hand, resembles how we see the real world with our eyes: closer objects appear bigger, and their projection shrinks as they move further away.
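The box example can be checked with a few lines of arithmetic. This is only a sketch of the size relationship (similar triangles for perspective, no distance term for orthographic); the function names and the near distance of 0.1 are chosen for illustration.

```cpp
#include <cassert>
#include <cmath>

// Apparent height on the near plane under perspective projection (similar triangles).
float perspectiveHeight(float objectHeight, float distance, float nearDistance)
{
    return objectHeight * nearDistance / distance;
}

// Under orthographic projection the distance does not enter the formula at all.
float orthographicHeight(float objectHeight, float /*distance*/)
{
    return objectHeight;
}

void projectionSizeExample()
{
    const float nearDistance = 0.1f;

    // Orthographic: the box at 1 m and the box at 1 km have the same projected height.
    assert(orthographicHeight(1.f, 1.f) == orthographicHeight(1.f, 1000.f));

    // Perspective: the box at 1 km appears about 1000 times smaller than the box at 1 m.
    const float closeBox = perspectiveHeight(1.f, 1.f, nearDistance);
    const float farBox = perspectiveHeight(1.f, 1000.f, nearDistance);
    assert(std::fabs(closeBox / farBox - 1000.f) < 0.01f);
}
```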

These projection types are easy to implement. We only need six values describing the view volume: the coordinates of its left, right, bottom, and top edges, and the distances of the near and far planes. The near plane is the closest distance that can be projected and the far plane is the furthest. Only fragments between these two distances are visible on the screen.
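As a rough sketch of how the six values are used, the functions below apply an orthographic mapping and an orthographic-to-perspective squeeze to a single point and check that the corners of the view volume land on the edges of the [-1, 1] cube. `ViewVolume`, `Vec4`, and the function names are hypothetical; the formulas follow the usual OpenGL-style convention where the camera looks down the negative z axis.

```cpp
#include <cassert>
#include <cmath>

// The six values: edges of the view volume and the two plane distances.
struct ViewVolume { float left, right, bottom, top, nearDistance, farDistance; };
struct Vec4 { float x, y, z, w; };

// Orthographic matrix applied to a homogeneous point: maps the box to [-1, 1] on each axis.
Vec4 applyOrthographic(const ViewVolume& v, const Vec4& p)
{
    const float width = v.right - v.left;
    const float height = v.top - v.bottom;
    const float depth = v.farDistance - v.nearDistance;
    return {
        2.f / width * p.x - (v.right + v.left) / width * p.w,
        2.f / height * p.y - (v.top + v.bottom) / height * p.w,
        -2.f / depth * p.z - (v.farDistance + v.nearDistance) / depth * p.w,
        p.w
    };
}

// Perspective: squeeze the frustum into the box, reuse the orthographic mapping, then divide by w.
Vec4 applyPerspective(const ViewVolume& v, const Vec4& p)
{
    const float n = v.nearDistance;
    const float f = v.farDistance;
    const Vec4 squeezed{n * p.x, n * p.y, (f + n) * p.z + f * n * p.w, -p.z};
    const Vec4 clip = applyOrthographic(v, squeezed);
    return {clip.x / clip.w, clip.y / clip.w, clip.z / clip.w, 1.f};
}

bool nearlyEqual(float a, float b) { return std::fabs(a - b) < 1e-4f; }

void projectionExample()
{
    const ViewVolume volume{-1.f, 1.f, -1.f, 1.f, 1.f, 10.f};

    // Orthographic: a corner on the near plane maps to z = -1.
    const Vec4 orthoNear = applyOrthographic(volume, {1.f, 1.f, -1.f, 1.f});
    assert(nearlyEqual(orthoNear.x, 1.f) && nearlyEqual(orthoNear.z, -1.f));

    // Perspective: the matching frustum corner on the far plane maps to (1, 1, 1).
    const Vec4 perspFar = applyPerspective(volume, {10.f, 10.f, -10.f, 1.f});
    assert(nearlyEqual(perspFar.x, 1.f) && nearlyEqual(perspFar.y, 1.f) && nearlyEqual(perspFar.z, 1.f));
}
```

Anything that maps outside the [-1, 1] range on any axis is outside the view volume and gets clipped.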

/*

Math.cpp

*/

void Math::LookAt(Matrix& viewingMatrix, const Vector3& position, const Vector3& target, const Vector3& upVector)

{

Vector3 forward = position - target;

forward.Normalize();

Vector3 left = upVector.Cross(forward);

left.Normalize();

Vector3 up = forward.Cross(left);

viewingMatrix = Matrix::IdentityMatrix;

viewingMatrix[0] = left.x;

viewingMatrix[4] = left.y;

viewingMatrix[8] = left.z;

viewingMatrix[1] = up.x;

viewingMatrix[5] = up.y;

viewingMatrix[9] = up.z;

viewingMatrix[2] = forward.x;

viewingMatrix[6] = forward.y;

viewingMatrix[10] = forward.z;

viewingMatrix[12] = -left.x * position.x - left.y * position.y - left.z * position.z;

viewingMatrix[13] = -up.x * position.x - up.y * position.y - up.z * position.z;

viewingMatrix[14] = -forward.x * position.x - forward.y * position.y - forward.z * position.z;

}

/*

Transformation operations of Vector3 class

*/

Vector3 Vector3::Translate(const Vector3& translation)

{

Matrix translateMatrix = Matrix::IdentityMatrix;

translateMatrix.m[3] = translation.x;

translateMatrix.m[7] = translation.y;

translateMatrix.m[11] = translation.z;

// Transform this vector, not the translation itself.

return Vector3(translateMatrix * Vector4(*this));

}

Vector3 Vector3::Rotate(const Vector3& rotation)

{

Vector3 result = *this;

if (rotation.x != 0)

{

Matrix rotateMatrix = Matrix::IdentityMatrix;

float cosTheta = cos(rotation.x);

float sinTheta = sin(rotation.x);

rotateMatrix.m[5] = cosTheta;

rotateMatrix.m[6] = -sinTheta;

rotateMatrix.m[9] = sinTheta;

rotateMatrix.m[10] = cosTheta;

result = Vector3(rotateMatrix * Vector4(result));

}

if (rotation.y != 0)

{

Matrix rotateMatrix = Matrix::IdentityMatrix;

float cosTheta = cos(rotation.y);

float sinTheta = sin(rotation.y);

rotateMatrix.m[0] = cosTheta;

rotateMatrix.m[2] = sinTheta;

rotateMatrix.m[8] = -sinTheta;

rotateMatrix.m[10] = cosTheta;

result = Vector3(rotateMatrix * Vector4(result));

}

if (rotation.z != 0)

{

Matrix rotateMatrix = Matrix::IdentityMatrix;

float cosTheta = cos(rotation.z);

float sinTheta = sin(rotation.z);

rotateMatrix.m[0] = cosTheta;

rotateMatrix.m[1] = -sinTheta;

rotateMatrix.m[4] = sinTheta;

rotateMatrix.m[5] = cosTheta;

result = Vector3(rotateMatrix * Vector4(result));

}

return result;

}

Vector3 Vector3::Scale(const Vector3& scale)

{

Matrix scaleMatrix = Matrix::IdentityMatrix;

scaleMatrix.m[0] = scale.x;

scaleMatrix.m[5] = scale.y;

scaleMatrix.m[10] = scale.z;

return Vector3(scaleMatrix * Vector4(*this));

}

/*

Camera.h

*/

#ifndef __CAMERA_H__

#define __CAMERA_H__

#include "Goknar/Core.h"

#include "Goknar/Matrix.h"

enum class GOKNAR_API CameraType : unsigned char

{

Orthographic,

Perspective

};

class GOKNAR_API Camera

{

public:

Camera() :

viewingMatrix_(Matrix::IdentityMatrix),

position_(Vector3::ZeroVector),

forwardVector_(Vector3::ForwardVector),

rightVector_(Vector3::RightVector),

upVector_(Vector3::UpVector),

nearPlane_(0.1f),

nearDistance_(0.1f),

farDistance_(100.f),

imageWidth_(1024),

imageHeight_(768),

type_(CameraType::Perspective)

{

SetProjectionMatrix();

LookAt();

}

Camera(const Vector3& position, const Vector3& forward, const Vector3& up);

Camera(const Camera* rhs)

{

if (rhs != this)

{

viewingMatrix_ = rhs->viewingMatrix_;

position_ = rhs->position_;

forwardVector_ = rhs->forwardVector_;

upVector_ = rhs->upVector_;

rightVector_ = rhs->rightVector_;

nearPlane_ = rhs->nearPlane_;

nearDistance_ = rhs->nearDistance_;

farDistance_ = rhs->farDistance_;

imageWidth_ = rhs->imageWidth_;

imageHeight_ = rhs->imageHeight_;

type_ = rhs->type_;

projectionMatrix_ = rhs->projectionMatrix_;

}

}

void InitCamera();

void MoveForward(float value);

void Yaw(float value);

void Pitch(float value);

void Roll(float value);

/* GETTER/SETTER */

void InitMatrices()

{

LookAt();

SetProjectionMatrix();

}

void SetProjectionMatrix()

{

float l = nearPlane_.x;

float r = nearPlane_.y;

float b = nearPlane_.z;

float t = nearPlane_.w;

// Set the projection matrix as it is orthographic

projectionMatrix_ = Matrix( 2 / (r - l), 0.f, 0.f, -(r + l) / (r - l),

0.f, 2 / (t - b), 0.f, -(t + b) / (t - b),

0.f, 0.f, -2 / (farDistance_ - nearDistance_), -(farDistance_ + nearDistance_) / (farDistance_ - nearDistance_),

0.f, 0.f, 0.f, 1.f);

if (type_ == CameraType::Perspective)

{

// Orthographic to perspective conversion matrix

Matrix o2p(nearDistance_, 0, 0, 0,

0, nearDistance_, 0, 0,

0, 0, farDistance_ + nearDistance_, farDistance_ * nearDistance_,

0, 0, -1, 0);

projectionMatrix_ = projectionMatrix_ * o2p;

}

}

float* GetViewingMatrixPointer()

{

return &viewingMatrix_[0];

}

float* GetProjectionMatrixPointer()

{

return &projectionMatrix_[0];

}

protected:

private:

void LookAt();

Matrix viewingMatrix_;

Matrix projectionMatrix_;

// Left Right Bottom Top

Vector4 nearPlane_;

Vector3 position_;

Vector3 forwardVector_;

Vector3 upVector_;

Vector3 rightVector_;

float nearDistance_;

float farDistance_;

int imageWidth_, imageHeight_;

CameraType type_;

};

#endif

/*

Camera.cpp

*/

#include "pch.h"

#include "Camera.h"

Camera::Camera(const Vector3& position, const Vector3& gaze, const Vector3& up) :

position_(position),

forwardVector_(gaze),

upVector_(up),

rightVector_(forwardVector_.Cross(upVector_)),

type_(CameraType::Perspective)

{

SetProjectionMatrix();

LookAt();

}

void Camera::InitCamera()

{

float forwardDotUp = Vector3::Dot(forwardVector_, upVector_);

if (-EPSILON <= forwardDotUp && forwardDotUp <= EPSILON)

{

rightVector_ = forwardVector_.Cross(upVector_);

upVector_ = rightVector_.Cross(forwardVector_);

}

LookAt();

}

void Camera::MoveForward(float value)

{

position_ += forwardVector_ * value;

LookAt();

}

void Camera::Yaw(float value)

{

forwardVector_ = forwardVector_.Rotate(upVector_ * value);

rightVector_ = forwardVector_.Cross(upVector_);

LookAt();

}

void Camera::Pitch(float value)

{

forwardVector_ = forwardVector_.Rotate(rightVector_ * value);

upVector_ = rightVector_.Cross(forwardVector_);

LookAt();

}

void Camera::Roll(float value)

{

rightVector_ = rightVector_.Rotate(forwardVector_ * value);

upVector_ = rightVector_.Cross(forwardVector_);

LookAt();

}

void Camera::LookAt()

{

Vector3 lookAtPos = position_ + forwardVector_ * nearDistance_;

Math::LookAt(viewingMatrix_,

position_,

lookAtPos,

upVector_);

}

Shader Class

I implemented a basic Shader class that handles shader binding/unbinding, uniform setting, and similar operations.

#ifndef __SHADER_H__

#define __SHADER_H__

#include "Math.h"

class Shader

{

public:

Shader();

Shader(const char* vertexShader, const char* fragmentShader);

~Shader();

void Bind() const;

void Unbind() const;

unsigned int GetProgramId() const

{

return programId_;

}

void Use() const;

void SetBool(const char* name, bool value);

void SetInt(const char* name, int value) const;

void SetFloat(const char* name, float value) const;

void SetMatrix(const char* name, const Matrix& matrix) const;

void SetVector3(const char* name, const Vector3& vector) const;

protected:

private:

unsigned int programId_;

};

#endif

/*

Shader.cpp

*/

#include "pch.h"

#include "Matrix.h"

#include "Shader.h"

#include "Log.h"

#include <glad/glad.h>

void ExitOnShaderIsNotCompiled(GLuint shaderId, const char* errorMessage)

{

GLint isCompiled = 0;

glGetShaderiv(shaderId, GL_COMPILE_STATUS, &isCompiled);

if (isCompiled == GL_FALSE)

{

GLint maxLength = 0;

glGetShaderiv(shaderId, GL_INFO_LOG_LENGTH, &maxLength);

GLchar* logMessage = new GLchar[maxLength + 1];

glGetShaderInfoLog(shaderId, maxLength, &maxLength, logMessage);

logMessage[maxLength] = '\0';

glDeleteShader(shaderId);

GOKNAR_CORE_ERROR("{0}", logMessage);

GOKNAR_ASSERT(false, errorMessage);

delete[] logMessage;

}

}

void ExitOnProgramError(GLuint programId, const char* errorMessage)

{

GLint isLinked = 0;

glGetProgramiv(programId, GL_LINK_STATUS, &isLinked);

if (isLinked == GL_FALSE)

{

GLint maxLength = 0;

// A program object must be queried with glGetProgramiv, not glGetShaderiv.

glGetProgramiv(programId, GL_INFO_LOG_LENGTH, &maxLength);

GLchar* logMessage = new GLchar[maxLength + 1];

glGetProgramInfoLog(programId, maxLength, &maxLength, logMessage);

logMessage[maxLength] = '\0';

glDeleteProgram(programId);

GOKNAR_CORE_ERROR("{0}", logMessage);

delete[] logMessage;

}

}

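// Initialize the id so that the destructor never deletes an indeterminate program name.

Shader::Shader() : programId_(0)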

{

}

Shader::Shader(const char* vertexShaderSource, const char* fragmentShaderSource)

{

const GLchar* vertexSource = (const GLchar*)vertexShaderSource;

GLuint vertexShaderId = glCreateShader(GL_VERTEX_SHADER);

glShaderSource(vertexShaderId, 1, &vertexSource, 0);

glCompileShader(vertexShaderId);

ExitOnShaderIsNotCompiled(vertexShaderId, "Vertex shader compilation error!");

const GLchar* fragmentSource = (const GLchar*)fragmentShaderSource;

GLuint fragmentShaderId = glCreateShader(GL_FRAGMENT_SHADER);

glShaderSource(fragmentShaderId, 1, &fragmentSource, 0);

glCompileShader(fragmentShaderId);

ExitOnShaderIsNotCompiled(fragmentShaderId, "Fragment shader compilation error!");

programId_ = glCreateProgram();

glAttachShader(programId_, vertexShaderId);

glAttachShader(programId_, fragmentShaderId);

glLinkProgram(programId_);

ExitOnProgramError(programId_, "Shader program link error!");

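glDetachShader(programId_, vertexShaderId);

glDetachShader(programId_, fragmentShaderId);

// The shader objects are no longer needed once the program is linked.

glDeleteShader(vertexShaderId);

glDeleteShader(fragmentShaderId);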

}

Shader::~Shader()

{

glDeleteProgram(programId_);

}

void Shader::Bind() const

{

glUseProgram(programId_);

}

void Shader::Unbind() const

{

glUseProgram(0);

}

void Shader::Use() const

{

glUseProgram(programId_);

}

void Shader::SetBool(const char* name, bool value)

{

glUniform1i(glGetUniformLocation(programId_, name), (int)value);

}

void Shader::SetInt(const char* name, int value) const

{

glUniform1i(glGetUniformLocation(programId_, name), value);

}

void Shader::SetFloat(const char* name, float value) const

{

glUniform1f(glGetUniformLocation(programId_, name), value);

}

void Shader::SetMatrix(const char* name, const Matrix& matrix) const

{

glUniformMatrix4fv(glGetUniformLocation(programId_, name), 1, GL_FALSE, &matrix[0]);

}

void Shader::SetVector3(const char* name, const Vector3& vector) const

{

glUniform3fv(glGetUniformLocation(programId_, name), 1, &vector.x);

}

Mesh

The Mesh class is the basic unit rendered by the renderer. It is built from two fundamental classes, Face and VertexData: Face is simply a container holding the indices of the vertices that together form a face, and VertexData holds every property of a vertex: its position, normal, and UV.

For now, the vertex and fragment shaders are assigned as strings. This will change in the upcoming article, where the shaders will be generated by a class named ShaderBuilder.
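As a small illustration of how faces and vertex data fit together, this sketch builds a unit quad from four vertices and two triangular faces. The structs are simplified stand-ins that mirror the shape of Face and VertexData; `Vec2`, `Vec3`, `QuadMesh`, and `makeUnitQuad` are hypothetical names for this example only.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Simplified stand-ins for the engine types, assumed for this sketch.
struct Vec2 { float x, y; };
struct Vec3 { float x, y, z; };

struct VertexData { Vec3 position; Vec3 normal; Vec2 uv; };
struct Face { unsigned int vertexIndices[3]; };

struct QuadMesh
{
    std::vector<VertexData> vertices;
    std::vector<Face> faces;
};

// Four shared vertices, two triangles: the faces only store indices into the vertex list.
QuadMesh makeUnitQuad()
{
    QuadMesh quad;
    const Vec3 normal{0.f, 0.f, 1.f}; // the quad lies in the xy plane and faces +z

    quad.vertices.push_back(VertexData{{0.f, 0.f, 0.f}, normal, {0.f, 0.f}});
    quad.vertices.push_back(VertexData{{1.f, 0.f, 0.f}, normal, {1.f, 0.f}});
    quad.vertices.push_back(VertexData{{1.f, 1.f, 0.f}, normal, {1.f, 1.f}});
    quad.vertices.push_back(VertexData{{0.f, 1.f, 0.f}, normal, {0.f, 1.f}});

    // Counter-clockwise winding when viewed from +z.
    quad.faces.push_back(Face{{0u, 1u, 2u}});
    quad.faces.push_back(Face{{0u, 2u, 3u}});
    return quad;
}

void quadExample()
{
    const QuadMesh quad = makeUnitQuad();
    assert(quad.vertices.size() == 4);
    assert(quad.faces.size() == 2);
    // Every index must refer to an existing vertex.
    for (const Face& face : quad.faces)
    {
        for (unsigned int index : face.vertexIndices)
        {
            assert(index < quad.vertices.size());
        }
    }
}
```

Vertices 0 and 2 are shared by both triangles, which is the point of indexed geometry: six corners are drawn from only four stored vertices.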

/*

Mesh.h

*/

#ifndef __MESH_H__

#define __MESH_H__

#include "Goknar/Core.h"

#include "Goknar/Math.h"

#include "Goknar/Matrix.h"

#include "glad/glad.h"

#include <vector>

class Shader;

class GOKNAR_API Face

{

public:

unsigned int vertexIndices[3];

};

class GOKNAR_API VertexData

{

public:

VertexData() : position(Vector3::ZeroVector), normal(Vector3::ZeroVector), uv(Vector2::ZeroVector) { }

VertexData(const Vector3& p) : position(p), normal(Vector3::ZeroVector), uv(Vector2::ZeroVector) { }

VertexData(const Vector3& pos, const Vector3& n) : position(pos), normal(n), uv(Vector2::ZeroVector) { }

VertexData(const Vector3& pos, const Vector3& n, const Vector2& uvCoord) : position(pos), normal(n), uv(uvCoord) { }

Vector3 position;

Vector3 normal;

Vector2 uv;

};

typedef std::vector<VertexData> VertexArray;

typedef std::vector<Face> FaceArray;

class GOKNAR_API Mesh

{

public:

Mesh();

~Mesh();

void Init();

void SetMaterialId(int materialId)

{

materialId_ = materialId;

}

void AddVertex(const Vector3& vertex)

{

vertices_->push_back(VertexData(vertex));

}

void SetVertexNormal(int index, const Vector3& n)

{

vertices_->at(index).normal = n;

}

const VertexArray* GetVerticesPointer() const

{

return vertices_;

}

void AddFace(const Face& face)

{

faces_->push_back(face);

}

const FaceArray* GetFacesPointer() const

{

return faces_;

}

const Shader* GetShader() const

{

return shader_;

}

int GetVertexCount() const

{

return vertexCount_;

}

int GetFaceCount() const

{

return faceCount_;

}

void Render() const;

private:

Matrix modelMatrix_;

VertexArray* vertices_;

FaceArray* faces_;

Shader* shader_;

unsigned int vertexCount_;

unsigned int faceCount_;

int materialId_;

};

#endif

/*

Mesh.cpp

*/

#include "pch.h"

#include "Mesh.h"

#include "Goknar/Camera.h"

#include "Goknar/Engine.h"

#include "Goknar/Material.h"

#include "Goknar/Scene.h"

#include "Goknar/Shader.h"

#include "Managers/CameraManager.h"

#include "Managers/ShaderBuilder.h"

Mesh::Mesh() :

modelMatrix_(Matrix::IdentityMatrix),

materialId_(0),

shader_(0),

vertexCount_(0),

faceCount_(0)

{

vertices_ = new VertexArray();

faces_ = new FaceArray();

engine->AddObjectToRenderer(this);

}

Mesh::~Mesh()

{

// These were allocated with new (not new[]), so they must be released with delete.

delete vertices_;

delete faces_;

delete shader_;

}

void Mesh::Init()

{

vertexCount_ = (int)vertices_->size();

faceCount_ = (int)faces_->size();

const char* vertexBuffer =

R"(

#version 330 core

layout(location = 0) in vec3 position;

layout(location = 1) in vec3 normal;

uniform mat4 MVP;

uniform mat4 modelMatrix;

out vec3 fragmentPosition;

out vec3 vertexNormal;

void main()

{

gl_Position = MVP * vec4(position, 1.f);

vertexNormal = mat3(transpose(inverse(modelMatrix))) * normal;

fragmentPosition = vec3(modelMatrix * vec4(position, 1.f));

}

)";

const char* fragmentBuffer =

R"(

#version 330

out vec4 color;

in vec3 fragmentPosition;

uniform vec3 pointLightPosition;

uniform vec3 pointLightIntensity;

uniform vec3 viewPosition;

in vec3 vertexNormal;

void main()

{

// Ambient

vec3 ambient = vec3(0.15f, 0.15f, 0.15f);

// Diffuse

vec3 diffuseReflectance = vec3(1.f, 1.f, 1.f);

vec3 wi = pointLightPosition - fragmentPosition;

float wiLength = length(wi);

wi /= wiLength;

float cosThetaPrime = max(0.f, dot(wi, vertexNormal));

vec3 diffuse = diffuseReflectance * cosThetaPrime * pointLightIntensity / (wiLength * wiLength);

// Specular

vec3 specularReflectance = vec3(1000.f, 1000.f, 1000.f);

float phongExponent = 50.f;

vec3 wo = viewPosition - fragmentPosition;

float woLength = length(wo);

wo /= woLength;

// wi and wo are already unit vectors here, so the half vector is their normalized sum.

vec3 halfVector = normalize(wi + wo);

float cosAlphaPrimeToThePowerOfPhongExponent = pow(max(0.f, dot(vertexNormal, halfVector)), phongExponent);

vec3 specular = specularReflectance * cosAlphaPrimeToThePowerOfPhongExponent;

color = vec4((ambient + diffuse + specular), 1.f);

}

)";

shader_ = new Shader(vertexBuffer, fragmentBuffer);

}

void Mesh::Render() const

{

Camera* activeCamera = engine->GetCameraManager()->GetActiveCamera();

Matrix MVP = activeCamera->GetViewingMatrix() * activeCamera->GetProjectionMatrix();

MVP = MVP * modelMatrix_;

// The Shader setters take the matrix or vector by reference.

shader_->SetMatrix("MVP", MVP);

shader_->SetMatrix("modelMatrix", modelMatrix_);

// Temporary test light that orbits the scene.

static float theta = 0.f;

static float radius = 10.f;

theta += 0.00025f;

Vector3 pointLightPosition = Vector3(radius * cos(theta), radius * sin(theta), 10.f);

shader_->SetVector3("pointLightPosition", pointLightPosition);

Vector3 pointLightIntensity(100.f, 100.f, 100.f);

shader_->SetVector3("pointLightIntensity", pointLightIntensity);

const Vector3& cameraPosition = activeCamera->GetPosition();

shader_->SetVector3("viewPosition", cameraPosition);

}

Renderer

I explained the basic Renderer class in the previous article. In this part of the project, I added functions for setting buffer data and for rendering.

Rendering Multiple Meshes

In the Init function I sum up every mesh’s vertex and face counts. In the SetBufferData function, the vertex and index buffers are generated and bound, then allocated using these totals. Each mesh’s data is then copied into the buffers with glBufferSubData.
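The bookkeeping behind the glBufferSubData calls can be sketched without any OpenGL at all: each mesh gets a byte range that starts where the previous mesh's data ended. `MeshSize`, `UploadRange`, and `computeUploadRanges` are hypothetical names, and the sizes in the example assume 32 bytes per vertex (eight floats) and 12 bytes per face (three unsigned ints).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct MeshSize { std::size_t vertexCount; std::size_t faceCount; };

// Where one mesh's data lives inside the shared vertex and index buffers.
struct UploadRange
{
    std::size_t vertexByteOffset;
    std::size_t vertexByteSize;
    std::size_t indexByteOffset;
    std::size_t indexByteSize;
};

// Packs the meshes back to back: every range begins where the previous one ended.
std::vector<UploadRange> computeUploadRanges(const std::vector<MeshSize>& meshes,
                                             std::size_t bytesPerVertex,
                                             std::size_t bytesPerFace)
{
    std::vector<UploadRange> ranges;
    std::size_t vertexOffset = 0;
    std::size_t indexOffset = 0;
    for (const MeshSize& mesh : meshes)
    {
        const std::size_t vertexBytes = mesh.vertexCount * bytesPerVertex;
        const std::size_t indexBytes = mesh.faceCount * bytesPerFace;
        ranges.push_back(UploadRange{vertexOffset, vertexBytes, indexOffset, indexBytes});
        vertexOffset += vertexBytes;
        indexOffset += indexBytes;
    }
    return ranges;
}

void uploadRangeExample()
{
    std::vector<MeshSize> meshes;
    meshes.push_back(MeshSize{4, 2});   // a quad
    meshes.push_back(MeshSize{8, 12});  // a cube
    const std::vector<UploadRange> ranges = computeUploadRanges(meshes, 32, 12);

    // The cube starts right after the quad's 4 * 32 vertex bytes and 2 * 12 index bytes.
    assert(ranges[1].vertexByteOffset == 128);
    assert(ranges[1].indexByteOffset == 24);
}
```

Each range corresponds to the offset and size arguments of one glBufferSubData call per buffer.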

In the Render function, OpenGL’s glDrawElementsBaseVertex is used to render every object with its own vertex indices. Because the vertices of all meshes are stacked contiguously in one buffer, using glDrawElements would require offsetting each mesh’s indices by the number of vertices stored before it; glDrawElementsBaseVertex spares us this by adding a base vertex to every index at draw time.
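The effect of the base vertex can be illustrated with plain integers. The sketch below computes, for each mesh, the three values a base-vertex draw needs (index count, byte offset into the index buffer, and base vertex) and shows which vertex a mesh-local index ends up addressing. `MeshSize`, `DrawCall`, `buildDrawCalls`, and `resolveIndex` are hypothetical names; no OpenGL calls are made.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct MeshSize { int vertexCount; int faceCount; };

// The per-mesh parameters of a base-vertex indexed draw.
struct DrawCall
{
    int indexCount;               // three indices per triangular face
    std::size_t indexByteOffset;  // where this mesh's indices start in the index buffer
    int baseVertex;               // number of vertices stored before this mesh
};

std::vector<DrawCall> buildDrawCalls(const std::vector<MeshSize>& meshes)
{
    std::vector<DrawCall> calls;
    std::size_t indexByteOffset = 0;
    int baseVertex = 0;
    for (const MeshSize& mesh : meshes)
    {
        const int indexCount = mesh.faceCount * 3;
        calls.push_back(DrawCall{indexCount, indexByteOffset, baseVertex});
        indexByteOffset += static_cast<std::size_t>(indexCount) * sizeof(unsigned int);
        baseVertex += mesh.vertexCount;
    }
    return calls;
}

// The vertex that a mesh-local index addresses once the base vertex is added.
unsigned int resolveIndex(unsigned int localIndex, const DrawCall& call)
{
    return localIndex + static_cast<unsigned int>(call.baseVertex);
}

void baseVertexExample()
{
    std::vector<MeshSize> meshes;
    meshes.push_back(MeshSize{4, 2}); // quad
    meshes.push_back(MeshSize{3, 1}); // triangle
    const std::vector<DrawCall> calls = buildDrawCalls(meshes);

    // The triangle keeps its local indices 0, 1, 2; the draw reads vertices 4, 5, 6.
    assert(calls[1].baseVertex == 4);
    assert(resolveIndex(2u, calls[1]) == 6u);
}
```

Because the shift happens at draw time, the index data of each mesh can be uploaded unchanged regardless of where its vertices end up in the shared buffer.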

/*

Renderer.h

*/

#ifndef __RENDERER_H__

#define __RENDERER_H__

#include "Goknar/Core.h"

#include "glad/glad.h"

#include <vector>

class GOKNAR_API Mesh;

class GOKNAR_API Renderer

{

public:

Renderer();

~Renderer();

void SetBufferData();

void Init();

void Render();

void AddObjectToRenderer(Mesh* object);

private:

std::vector<Mesh*> objectsToBeRendered_;

unsigned int totalVertexSize_;

unsigned int totalFaceSize_;

GLuint vertexBufferId_;

GLuint indexBufferId_;

};

#endif

/*

Renderer.cpp

*/

Renderer::~Renderer()

{

glDisableVertexAttribArray(0);

glDisableVertexAttribArray(1);

glDisableVertexAttribArray(2);

glDeleteBuffers(1, &vertexBufferId_);

glDeleteBuffers(1, &indexBufferId_);

}

void Renderer::SetBufferData()

{

/*

Vertex buffer

*/

unsigned int sizeOfVertexData = sizeof(VertexData);

glGenBuffers(1, &vertexBufferId_);

glBindBuffer(GL_ARRAY_BUFFER, vertexBufferId_);

glBufferData(GL_ARRAY_BUFFER, totalVertexSize_ * sizeOfVertexData, nullptr, GL_STATIC_DRAW);

/*

Index buffer

*/

glGenBuffers(1, &indexBufferId_);

glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, indexBufferId_);

glBufferData(GL_ELEMENT_ARRAY_BUFFER, totalFaceSize_ * sizeof(Face), nullptr, GL_STATIC_DRAW);

/*

Buffer Sub-Data

*/

int vertexStartIndex = 0;

int faceStartIndex = 0;

for (const Mesh* mesh : objectsToBeRendered_)

{

const VertexArray* vertexArrayPtr = mesh->GetVerticesPointer();

int vertexSizeInBytes = vertexArrayPtr->size() * sizeof(vertexArrayPtr->at(0));

glBufferSubData(GL_ARRAY_BUFFER, vertexStartIndex, vertexSizeInBytes, &vertexArrayPtr->at(0));

const FaceArray* faceArrayPtr = mesh->GetFacesPointer();

int faceSizeInBytes = faceArrayPtr->size() * sizeof(faceArrayPtr->at(0));

glBufferSubData(GL_ELEMENT_ARRAY_BUFFER, faceStartIndex, faceSizeInBytes, &faceArrayPtr->at(0));

vertexStartIndex += vertexSizeInBytes;

faceStartIndex += faceSizeInBytes;

}

// Vertex position

int offset = 0;

glEnableVertexAttribArray(0);

glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, sizeOfVertexData, (void*)offset);

// Vertex normal

offset += sizeof(VertexData::position);

glEnableVertexAttribArray(1);

glVertexAttribPointer(1, 3, GL_FLOAT, GL_FALSE, sizeOfVertexData, (void*)offset);

// Vertex UV

offset += sizeof(VertexData::normal);

glEnableVertexAttribArray(2);

glVertexAttribPointer(2, 2, GL_FLOAT, GL_FALSE, sizeOfVertexData, (void*)offset);

}

void Renderer::Init()

{

glEnable(GL_DEPTH_TEST);

glEnable(GL_CULL_FACE);

glDepthFunc(GL_LEQUAL);

for (Mesh* mesh : objectsToBeRendered_)

{

totalVertexSize_ += mesh->GetVerticesPointer()->size();

totalFaceSize_ += mesh->GetFacesPointer()->size();

mesh->Init();

}

SetBufferData();

}

void Renderer::Render()

{

const Colorf& sceneBackgroundColor = engine->GetApplication()->GetMainScene()->GetBackgroundColor();

// Set the clear color before clearing so that it applies to the current frame.

glClearColor(sceneBackgroundColor.r, sceneBackgroundColor.g, sceneBackgroundColor.b, 1.f);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

int vertexStartingIndex = 0;

int baseVertex = 0;

for (const Mesh* mesh : objectsToBeRendered_)

{

mesh->GetShader()->Use();

mesh->Render();

int facePointCount = mesh->GetFaceCount() * 3;

glDrawElementsBaseVertex(GL_TRIANGLES, facePointCount, GL_UNSIGNED_INT, (void*)vertexStartingIndex, baseVertex);

vertexStartingIndex += facePointCount * sizeof(Face::vertexIndices[0]);

baseVertex += mesh->GetVertexCount();

}

}

void Renderer::AddObjectToRenderer(Mesh* object)

{

objectsToBeRendered_.push_back(object);

}

You can check the GitHub repository for the source files.

I have now added a project for Goknar Engine on GitHub. You can follow the status of the project there.

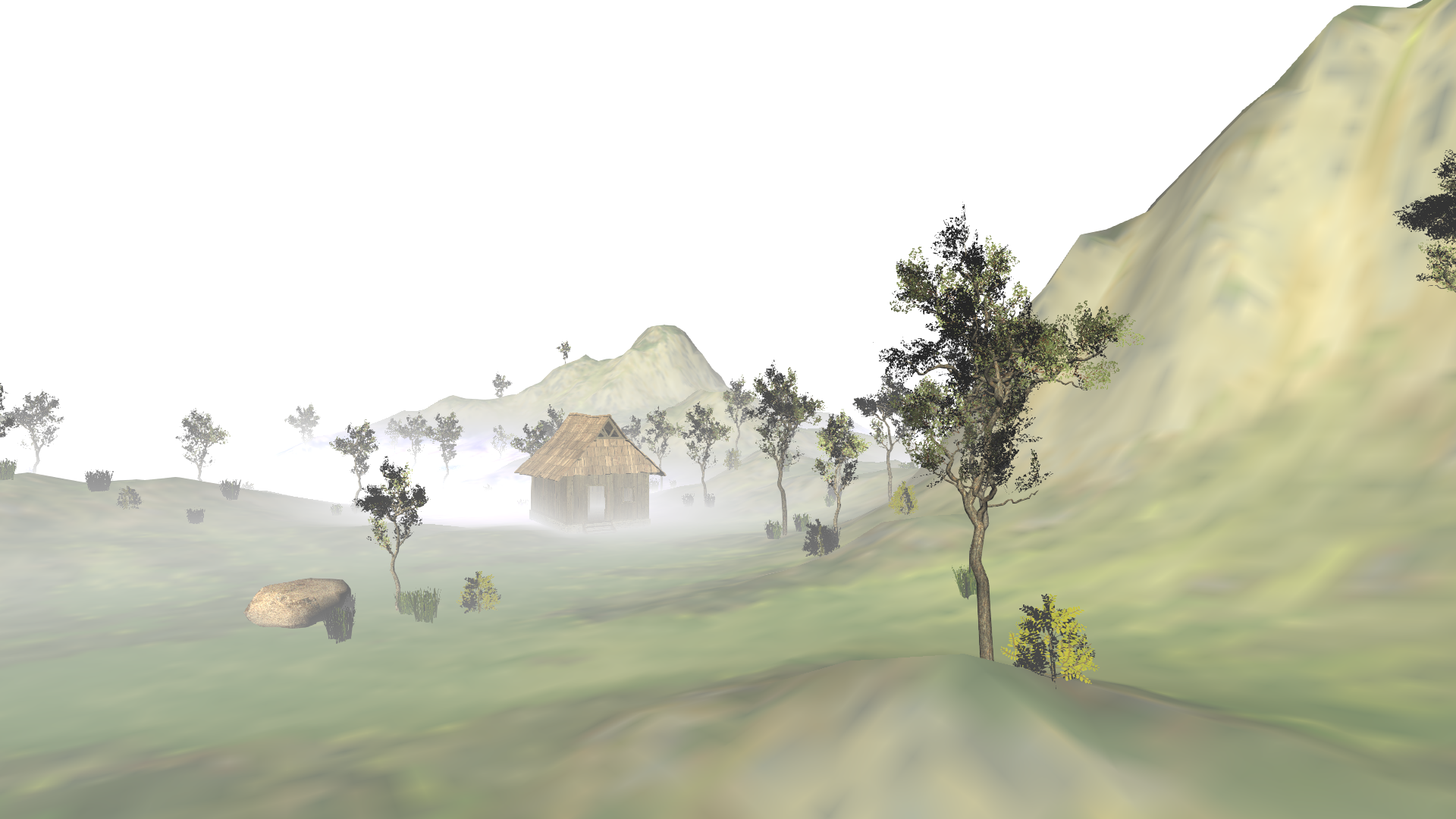

In the next article I will talk about static/dynamic lighting implementations. Until then, take care 🙂